SaaSletter - Is AI An Abject Disaster?

Plus our Cloud Ratings B2B AI Interest Index for October 2025


Since I’ve been regularly consuming examples of AI productivity gains - whether at SaaStr or at S&P500 public companies, more on both below - I’d assumed that headline numbers on AI benchmarking charts were similarly positive: “A 70.0 on a legal benchmark report surely means AI lawyers are 70% as good as humans, right? That seems pretty good.”

Using Scale AI’s SEAL LLM Leaderboards, we translated 3 different studies into approximate comparisons with their human equivalents by domain:

Unsurprisingly, coding scores well, with Finance and Legal OK(?) given that we are early(?) in the AI cycle. The drop-off from All Questions (including Easy) to Hard Questions is meaningful across all 3.

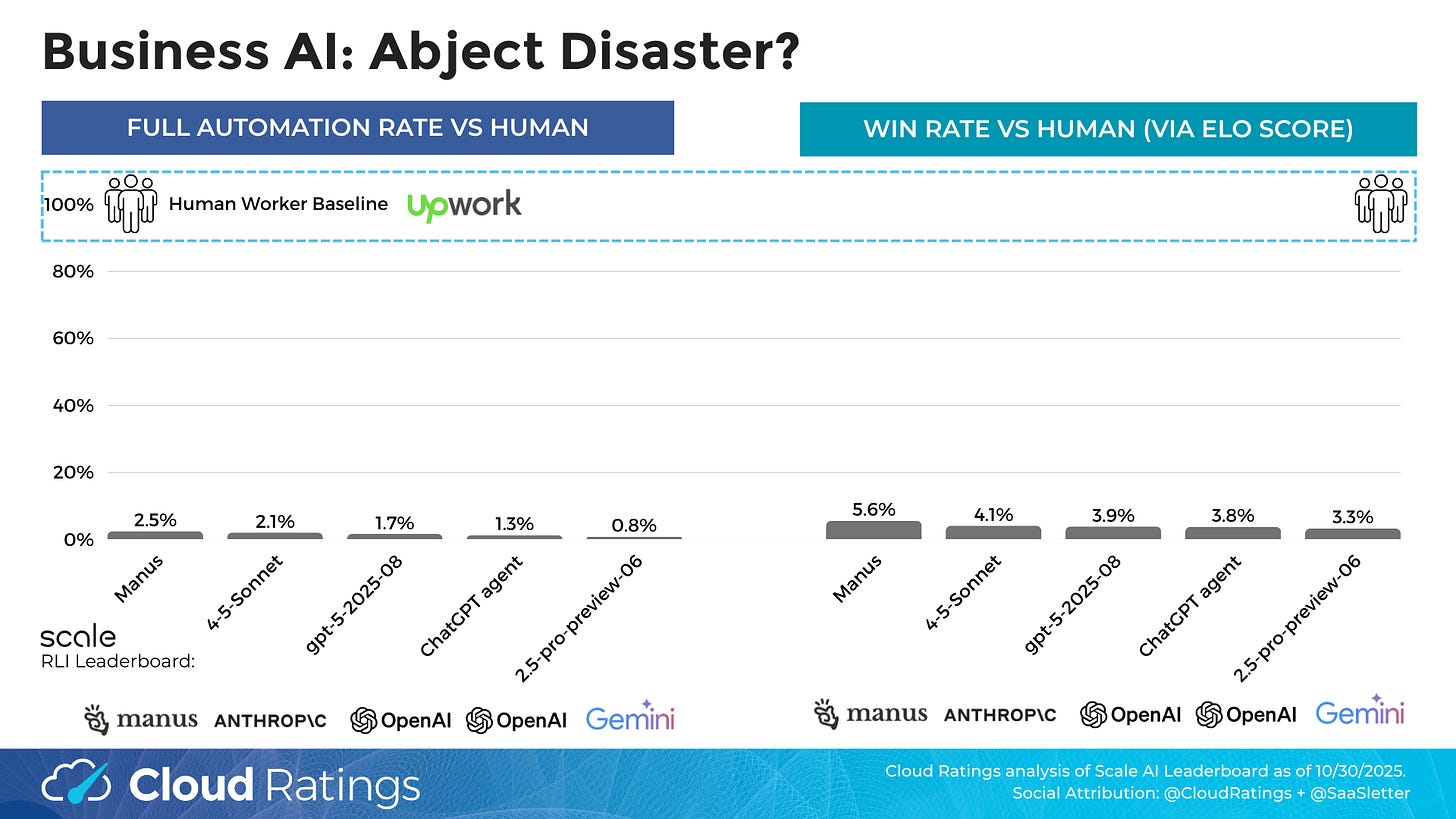

A fascinating new study from the Center for AI Safety and Scale AI caught my eye: the Remote Labor Index (RLI), which benchmarks AI against the performance of experienced Upwork freelancers across 240 projects.

The freelancer vs. AI projects:

- Spanned video, CAD, graphic design, game development, audio, architecture, and more
- Mean Human Completion Time: 28.9 hours (Median: 11.5 hours)
- Average Project Value: $632.60 (Median: $200)
- Implied hourly rates: roughly $17-$22/hr
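The $17-$22/hr range follows from dividing project value by completion time. A minimal sketch, assuming the mean-based and median-based rates are computed independently (our reading of the arithmetic, not necessarily how the RLI authors would derive a rate); the function name is hypothetical:

```python
# Implied hourly rates from the RLI project statistics quoted above.
# ASSUMPTION: rate = project value / human completion time, computed
# separately for the mean pair and the median pair (a rough proxy).

MEAN_HOURS, MEDIAN_HOURS = 28.9, 11.5
MEAN_VALUE, MEDIAN_VALUE = 632.60, 200.00


def implied_hourly_rate(project_value: float, hours: float) -> float:
    """Dollars per hour if the whole project value pays for the hours worked."""
    return project_value / hours


mean_rate = implied_hourly_rate(MEAN_VALUE, MEAN_HOURS)
median_rate = implied_hourly_rate(MEDIAN_VALUE, MEDIAN_HOURS)

print(f"mean-based:   ${mean_rate:.2f}/hr")
print(f"median-based: ${median_rate:.2f}/hr")
```

The median pair gives about $17.39/hr and the mean pair about $21.89/hr, which brackets the range above.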

The project success rates were surprisingly low: 0.8%-2.5%! That was especially jarring in light of Elo scores (Elo Wikipedia entry) as high as 509, which I, as a non-chess player, had assumed implied ~51% capability versus humans.
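For reference, the standard Elo model converts a rating *gap* into an expected score, so a rating only means something relative to another rating. A minimal sketch; the 1,000-point human reference is an assumption for illustration (check the RLI paper for how its Elo scale is actually anchored):

```python
# Standard Elo expected-score formula (logistic, base 10, 400-point scale).
# ASSUMPTION: the human reference rating of 1000 is illustrative only.


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


AI_RATING = 509.0         # highest AI Elo cited above
HUMAN_REFERENCE = 1000.0  # hypothetical human anchor (assumption)

ai_vs_human = elo_expected_score(AI_RATING, HUMAN_REFERENCE)
equal_ratings = elo_expected_score(1000.0, 1000.0)

print(f"AI vs. human reference: {ai_vs_human:.1%}")
print(f"equal ratings:          {equal_ratings:.1%}")
```

Under that assumed anchor, a 509 rating implies an expected score of roughly 5.6% against the human reference, while equal ratings give 50%.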

Relative to what’s “priced in” and the sheer amount of capital flowing into AI, surely these success rates are an abject disaster?

Why are the headline success numbers so low? The Remote Labor Index measures Full Automation as: “The percentage of projects where an AI’s deliverable is judged at least as good as the human standard. This is the primary success metric, defined as whether a ‘reasonable client’ would accept the work.”

The critical failure driver: any 10+ hour project requires multiple steps, so any per-step performance gap versus humans compounds, especially if the work is unsupervised.
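The compounding can be written down directly. A minimal sketch, assuming each step succeeds independently at the same relative rate $r$ (human $= 1$) with no correction between steps, a deliberately brute assumption:

$$
R_n = r^n, \qquad \text{gap}_n = 1 - r^n
$$

Plugging in the 70.0 legal score from the opening example, read naively as $r = 0.70$:

$$
R_5 = 0.70^5 \approx 0.168, \qquad R_{10} = 0.70^{10} \approx 0.028
$$

So a seemingly decent 70% per-step score leaves under 3% of human-equivalent output after 10 steps.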

Taking this (maybe blindingly obvious) insight back to the Scale leaderboards for other business AI domains, seemingly OK headline capabilities collapse once a multiple-step assumption is applied, as the 5- and 10-step calculations here show:

At 10 steps, AI Coding is 85% worse than human developers, while Finance AI and Legal AI yield results 99.8% worse than humans!
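Running the compounding backwards shows which per-step scores those 10-step figures imply. A minimal sketch under the same independent-steps assumption; the function names are hypothetical, and the per-step ratios are derived from the 85% and 99.8% figures above rather than read off the leaderboards:

```python
# Back-solve the per-step relative score r implied by an n-step result,
# using the brute compounding assumption R_n = r ** n (human = 1.0).


def compounded_relative_score(per_step: float, steps: int) -> float:
    """Relative success after `steps` independent steps."""
    return per_step ** steps


def implied_per_step(worse_fraction: float, steps: int) -> float:
    """Per-step ratio r such that 1 - r**steps equals `worse_fraction`."""
    return (1.0 - worse_fraction) ** (1.0 / steps)


STEPS = 10
coding_r = implied_per_step(0.85, STEPS)          # "85% worse" at 10 steps
finance_legal_r = implied_per_step(0.998, STEPS)  # "99.8% worse" at 10 steps

for label, r in [("Coding", coding_r), ("Finance/Legal", finance_legal_r)]:
    r5 = compounded_relative_score(r, 5)
    r10 = compounded_relative_score(r, STEPS)
    print(f"{label}: per-step {r:.1%}, 5 steps {r5:.1%}, 10 steps {r10:.2%}")
```

Under this assumption, the 10-step figures imply per-step relative scores of roughly 83% for Coding and 54% for Finance/Legal; the 5-step column shows how much of the decay has already happened halfway.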

Caveat: this is a brute, mechanical calculation without an ounce of the sophistication of research benchmarks (the RLI paper involved 48 authors!).

What Does This Success Rate Math Mean?

My immediate reaction: these near-zero AI success rates are damning for the “Software Is Dead” / “End of Software” take (Chris Paik 2024 Google Doc).

At a more philosophical level, the success results reminded me of the “AI gives you infinite interns” framing (see Benedict Evans’ November 2025 “AI Eats The World”).

Teasing out the analogy: can interns truly help with easy work (like moving logos around or taking meeting notes)? For sure. How often would an intern complete a 10-hour project (for an experienced professional) at an experienced professional’s quality? Very rarely. Taking into account any manager costs (like assigning or reviewing the project), those intern projects destroy value.
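The value-destruction point is simple expected-value arithmetic. A hypothetical sketch, assuming all-or-nothing outcomes, failed work worth $0, and an illustrative (made-up) $50 oversight cost per project; only the project value and the 0.8%-2.5% success rates come from the RLI figures above:

```python
# Hypothetical expected-value sketch of delegating a project to an "intern"
# (human or AI) that consumes manager time whether or not it succeeds.
# ASSUMPTIONS: success is all-or-nothing, failed work is worth $0, and the
# oversight cost below is a made-up illustrative figure.

PROJECT_VALUE = 632.60  # mean RLI project value cited above
MANAGER_COST = 50.00    # hypothetical cost to assign and review one project


def net_value_of_delegation(success_rate: float, project_value: float,
                            manager_cost: float) -> float:
    """Expected payoff of a delegated project minus its fixed oversight cost."""
    return success_rate * project_value - manager_cost


# Success rate at which the expected payoff just covers the oversight cost.
break_even_rate = MANAGER_COST / PROJECT_VALUE

for rate in (0.008, 0.025, 0.50):  # RLI low/high, plus a hypothetical 50%
    net = net_value_of_delegation(rate, PROJECT_VALUE, MANAGER_COST)
    print(f"success {rate:.1%}: expected net value {net:+.2f} USD")

print(f"break-even success rate: {break_even_rate:.1%}")
```

With these made-up inputs, delegation only pays off above a success rate of roughly 7.9%, well above the 0.8%-2.5% RLI range.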

Compared to the latest Scale AI benchmarks + math, the intern framework still holds up.

As an aside, the *infinite* aspect is alluring and well captured by Benedict Evans’ 1800s steam-engine analogy. Anything *at all positive* multiplied by *infinity* is wildly attractive and worth trying to get right.

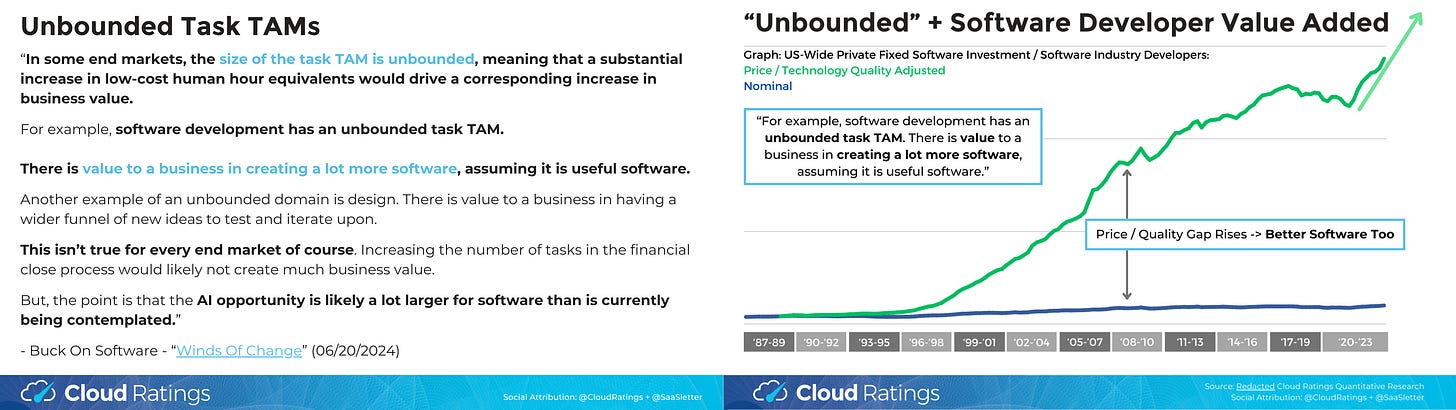

All of which aligns with Buck on Software’s 2024 “Winds of Change” and its key concept of unbounded task TAMs… so long as it is useful software.

Our macroeconomic work at Cloud Ratings on software developer value added (slides) sets a more encouraging precedent for AI: like software developers, AI interns will be better utilized over time, especially in terms of price/quality.

Bad Benchmarks But Real World AI Successes: SaaStr + S&P500

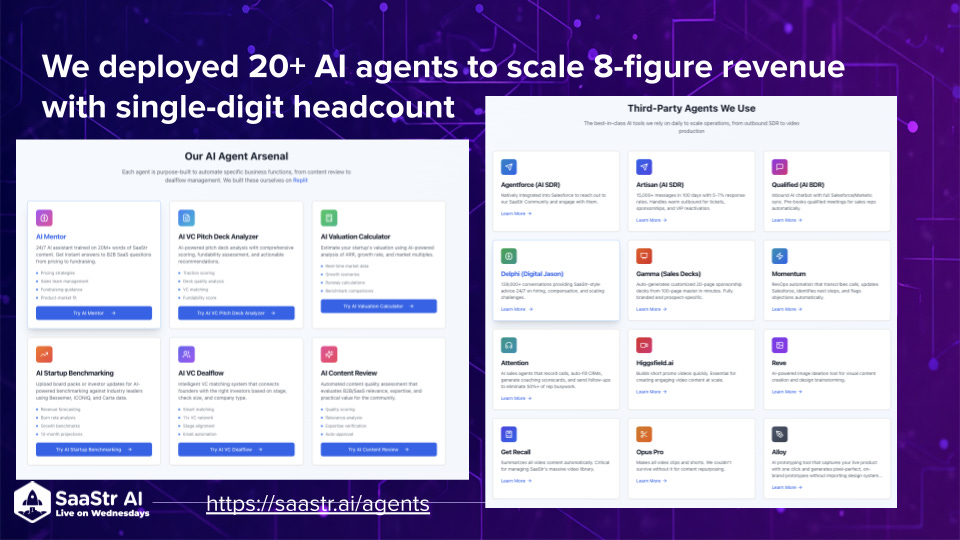

Tracking the progress SaaStr (Amelia Lerutte + Jason Lemkin) has made internally with their implementation of AI agents has raised my expectations of what is possible in the real world… with hard human work to get those AI results.

SaaStr recently hosted a remarkably transparent workshop (SLIDES | VIDEO) on their AI agent learnings. Seriously, go watch it.

A key theme of “constant calibration + daily oversight” certainly aligns with the Scale benchmarks and the “infinite interns” concept.

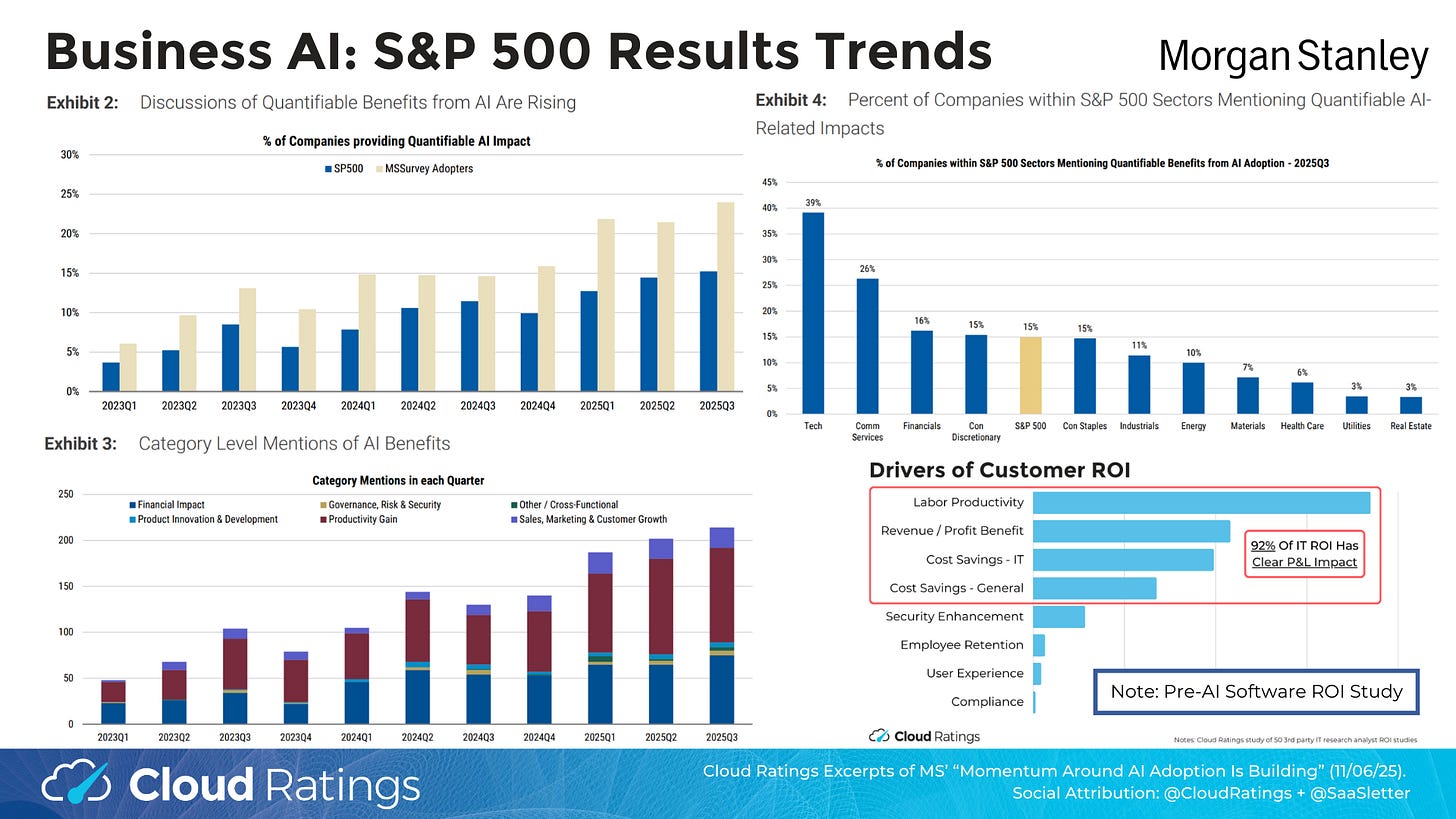

The growing volume of AI success disclosures from S&P 500 components (via Morgan Stanley, 11/06/25) also serves as a counterpoint to any “AI = abject disaster” conclusions:

Of course, relative to what’s priced in for AI, perhaps 15% of the S&P500 disclosing AI gains is too low? And is it too narrow, with only mid-single-digit disclosure rates in real-world sectors like Materials and Health Care (versus a 39% disclosure rate in Tech broadly and 59% for S&P500 Software & Services)?

Of note, the mix of ROI drivers between AI and pre-AI software (per Cloud Ratings’ “Drivers of Software ROI” and “IT ROI” studies) is very consistent.

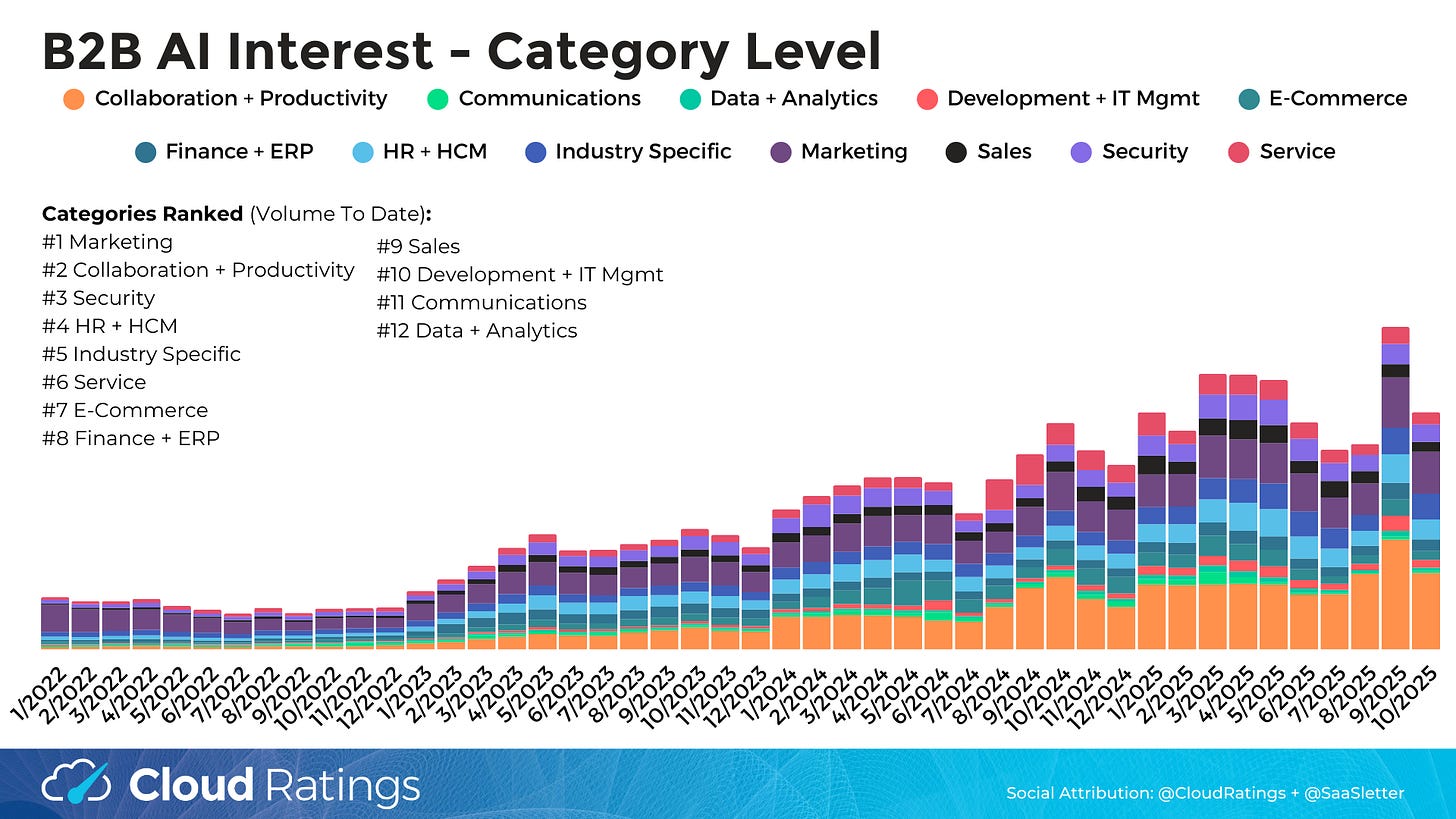

October 2025 B2B AI Interest Index from Cloud Ratings

We’ve updated our Cloud Ratings B2B AI Interest Index through October 2025 - full slides below:

Thematic Category Interest (n = 47 sub-categories tracked, e.g., “manufacturing AI” or “supply chain AI”) declined sequentially versus a strong September, though it remains above its summer lull.

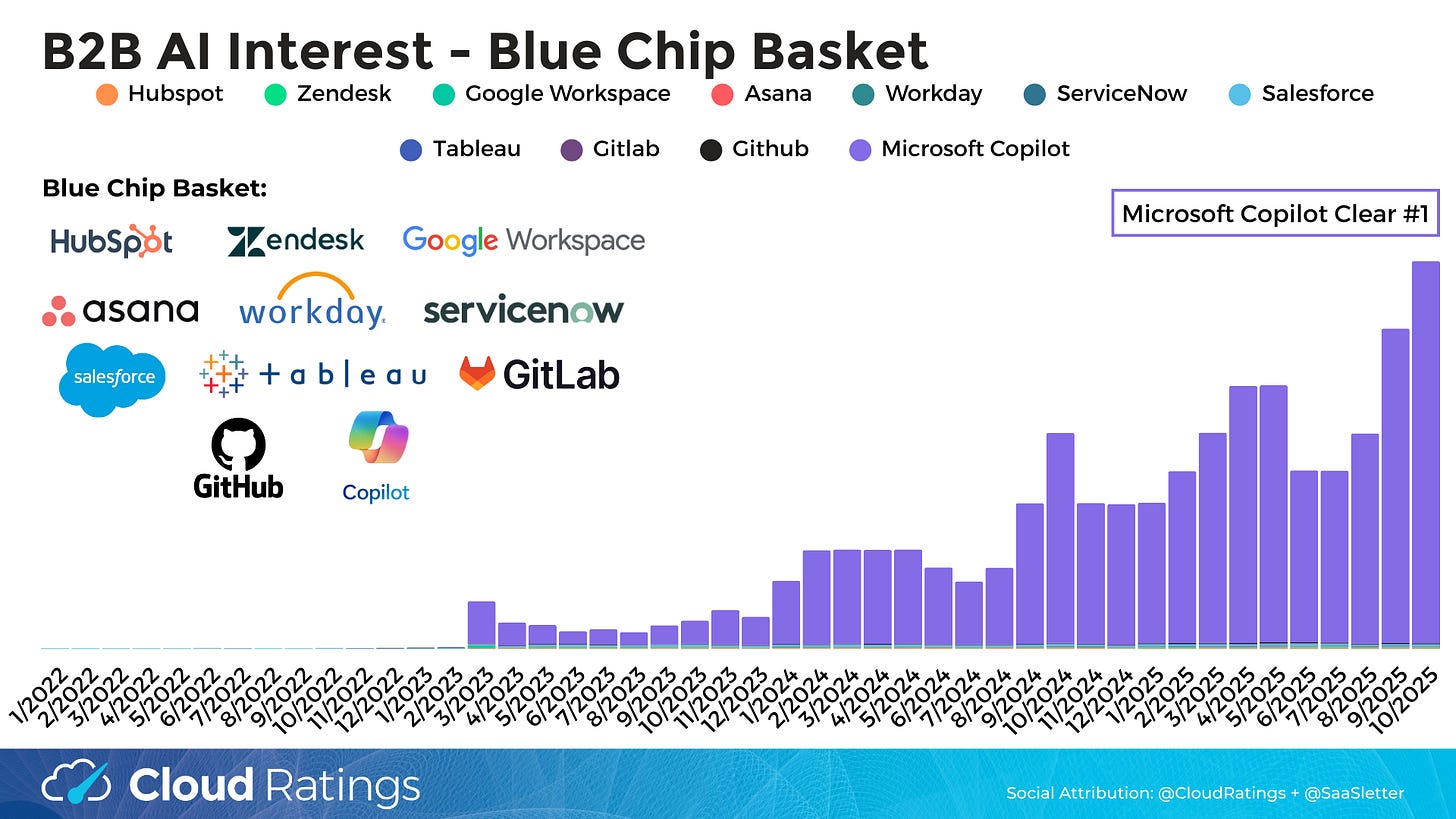

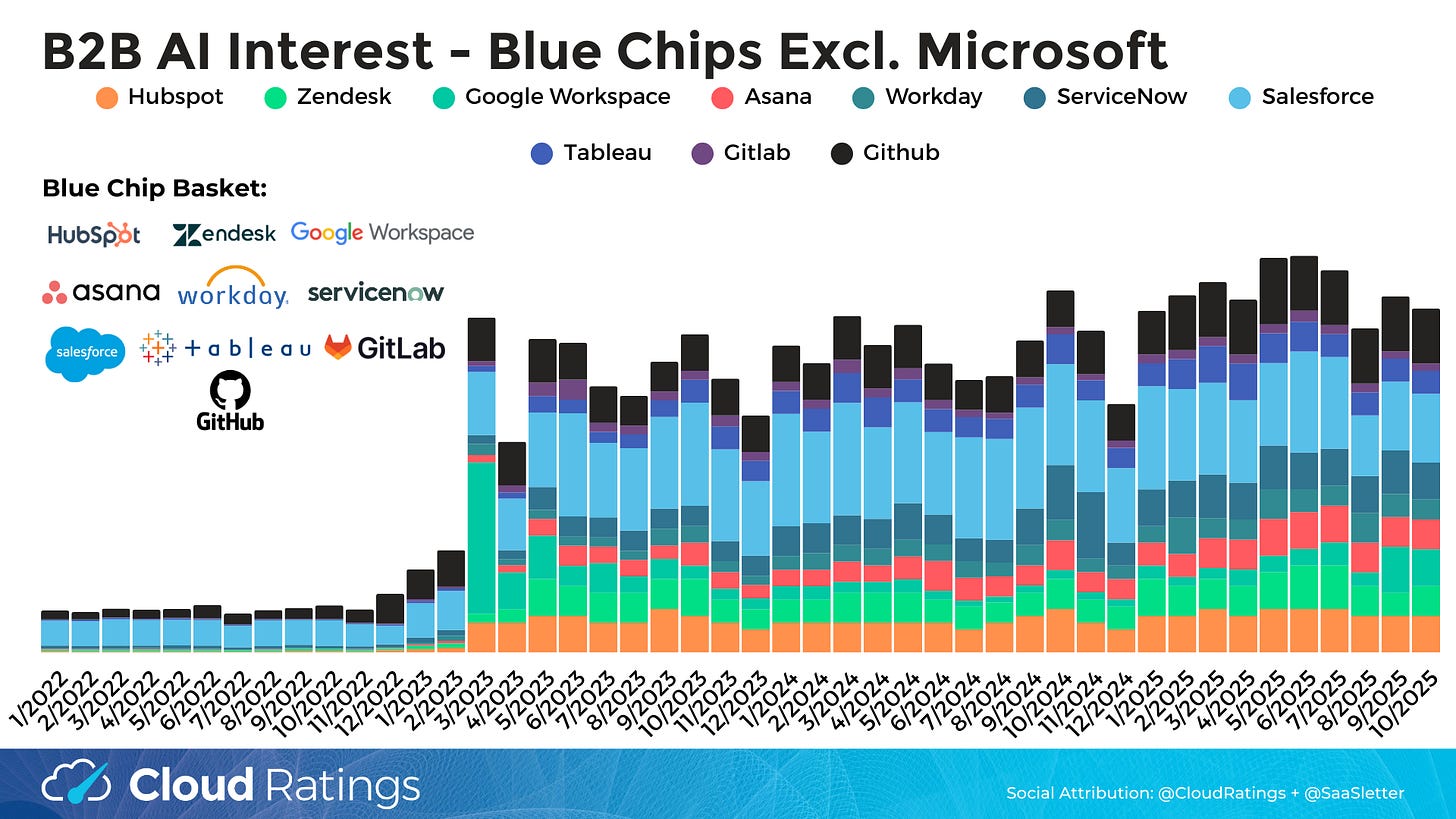

Bellwether Microsoft Copilot continued its acceleration. Other “Blue Chips” (e.g., HubSpot, ServiceNow) remain range-bound at best and small in absolute terms.

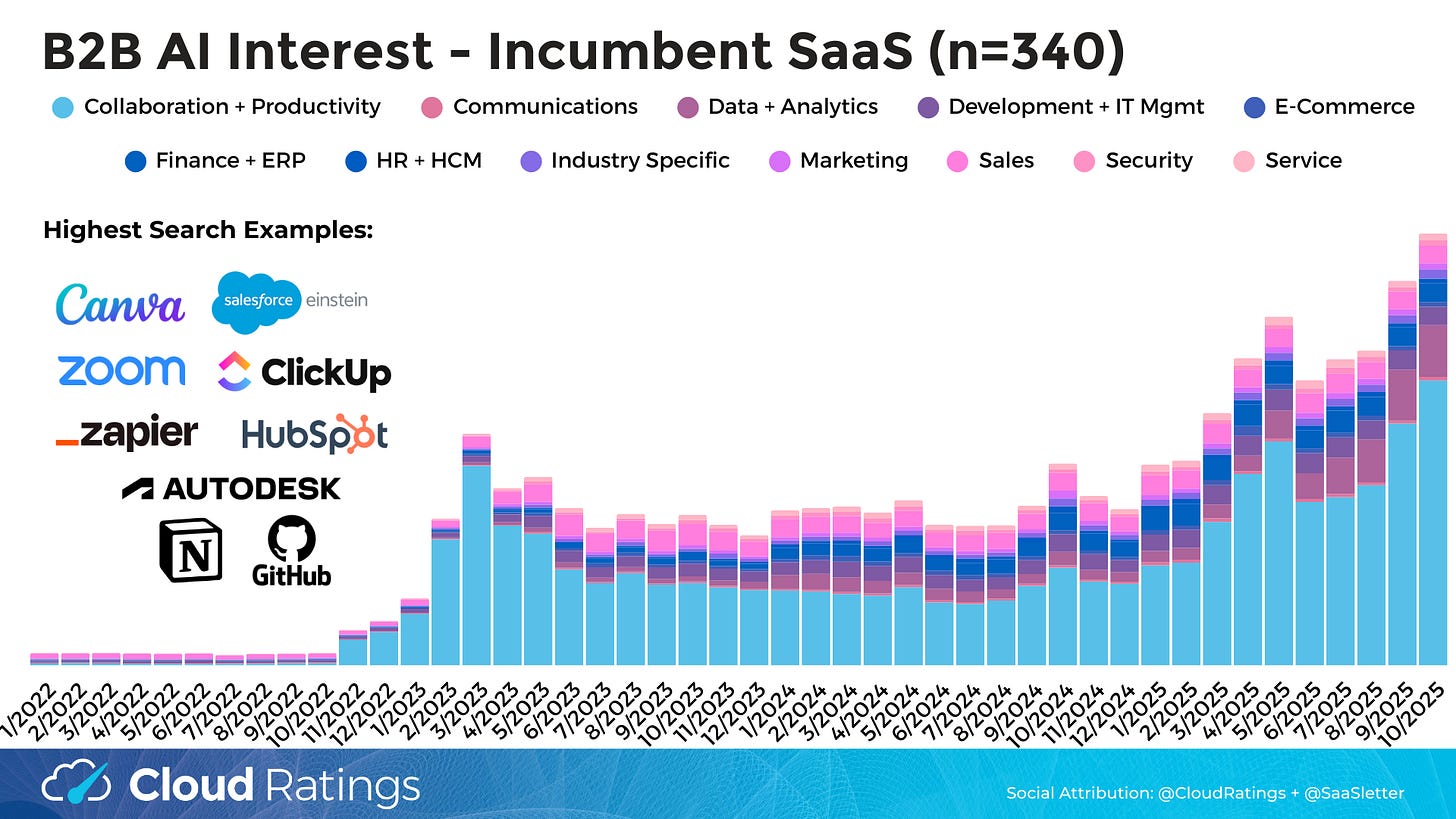

SaaS Incumbents (n = 340: the same 340 vendors tracked in our forward-looking, top-of-funnel-focused SaaS Demand Index) have seen a notable 2025 breakout relative to a very range-bound 2024, with October accelerating:

Podcast With Godard Abel Of G2

FULL EPISODE: VIDEO | AUDIO: Apple Podcasts | Spotify | All Platforms

Our recent AI-oriented podcast with Godard Abel (CEO of G2) complements much of this newsletter edition.

About Cloud Ratings

In mid-2024, we announced a research partnership with G2 - more here:

This slide shows how our G2-enhanced Quadrants (like our recent Sales Compensation Software release), this business-of-software newsletter you are reading, our podcasts, and our True ROI practice area all fit within our modern analyst firm:
