SaaSletter - AI Model Progress

Is B2B AI ROI High Enough From Current Models?

This week’s edition was set to cover ARR/ACV per customer, primarily to give early-stage founders context to work backward from as they think through pricing strategy. But AI news pre-empted it → more on that soon.

Slowing AI Model Progress

“OpenAI Shifts Strategy as Rate of ‘GPT’ AI Improvements Slows” from The Information’s Stephanie Palazzolo, Erin Woo, and Amir Efrati has attracted a ton of attention.

My key excerpts:

Hitting a Data Wall: One reason for the GPT slowdown is a dwindling supply of high-quality text and other data that LLMs can process during pretraining to make sense of the world and the relationships between different concepts so they can solve problems such as drafting blog posts or solving coding bugs, OpenAI employees and researchers said.

In the past few years, LLMs used publicly available text and other data from websites, books and other sources for the pretraining process, but developers of the models have largely squeezed as much out of that type of data as they can…

And, alarmingly to me, a 10x increase in cost per query for certain compute-heavy approaches:

That means the quality of o1’s responses can continue to improve when the model is provided with additional computing resources while it’s answering user questions, even without making changes to the underlying model. And if OpenAI can keep improving the quality of the underlying model, even at a slower rate, it can result in a much better reasoning result, said one person who has knowledge of the process.

“This opens up a completely new dimension for scaling,” Brown said during the TEDAI conference. Researchers can improve model responses by going from “spending a penny per query to 10 cents per query,” he said.
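The sketch below makes the quoted price points concrete for a B2B deployment. It compares monthly inference spend at 1 cent versus 10 cents per query. It assumes a flat price per query. The one-million-query monthly volume is a hypothetical figure for illustration and does not come from The Information article.

```python
# Per-query price points from the quote above, in USD.
PENNY_PER_QUERY = 0.01
TEN_CENTS_PER_QUERY = 0.10


def monthly_inference_cost(queries_per_month: int, cost_per_query: float) -> float:
    """Monthly inference spend, assuming a flat (hypothetical) price per query."""
    return queries_per_month * cost_per_query


# Hypothetical monthly query volume for a B2B deployment (assumption, not sourced).
QUERIES_PER_MONTH = 1_000_000

baseline = monthly_inference_cost(QUERIES_PER_MONTH, PENNY_PER_QUERY)
compute_heavy = monthly_inference_cost(QUERIES_PER_MONTH, TEN_CENTS_PER_QUERY)

print(f"1 cent/query:   ${baseline:,.0f} per month")
print(f"10 cents/query: ${compute_heavy:,.0f} per month")
print(f"multiple:       {compute_heavy / baseline:.0f}x")
```

The 10x multiple is the same at any volume. Volume determines whether the absolute dollar increase is material relative to the benefit the AI workflow produces.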

Slowing AI Model Progress → *B2B* AI Read Through - ROI

First, this newsletter does not provide investment advice. Second, our coverage focuses on B2B applications of technology, whether software or AI. The following opinions have no bearing on AI infrastructure/hardware or on B2C AI trends and investment cases. Always do your own work.

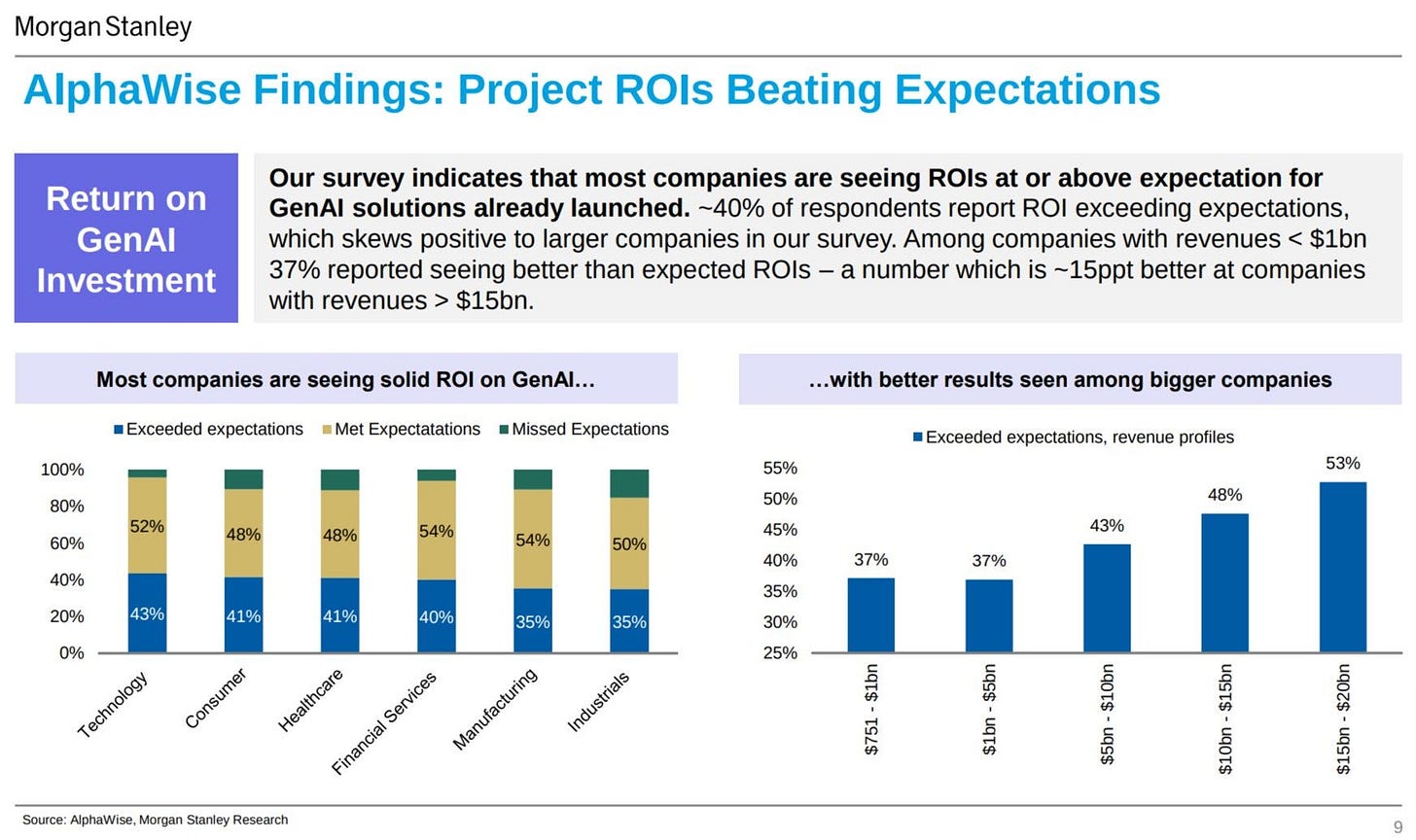

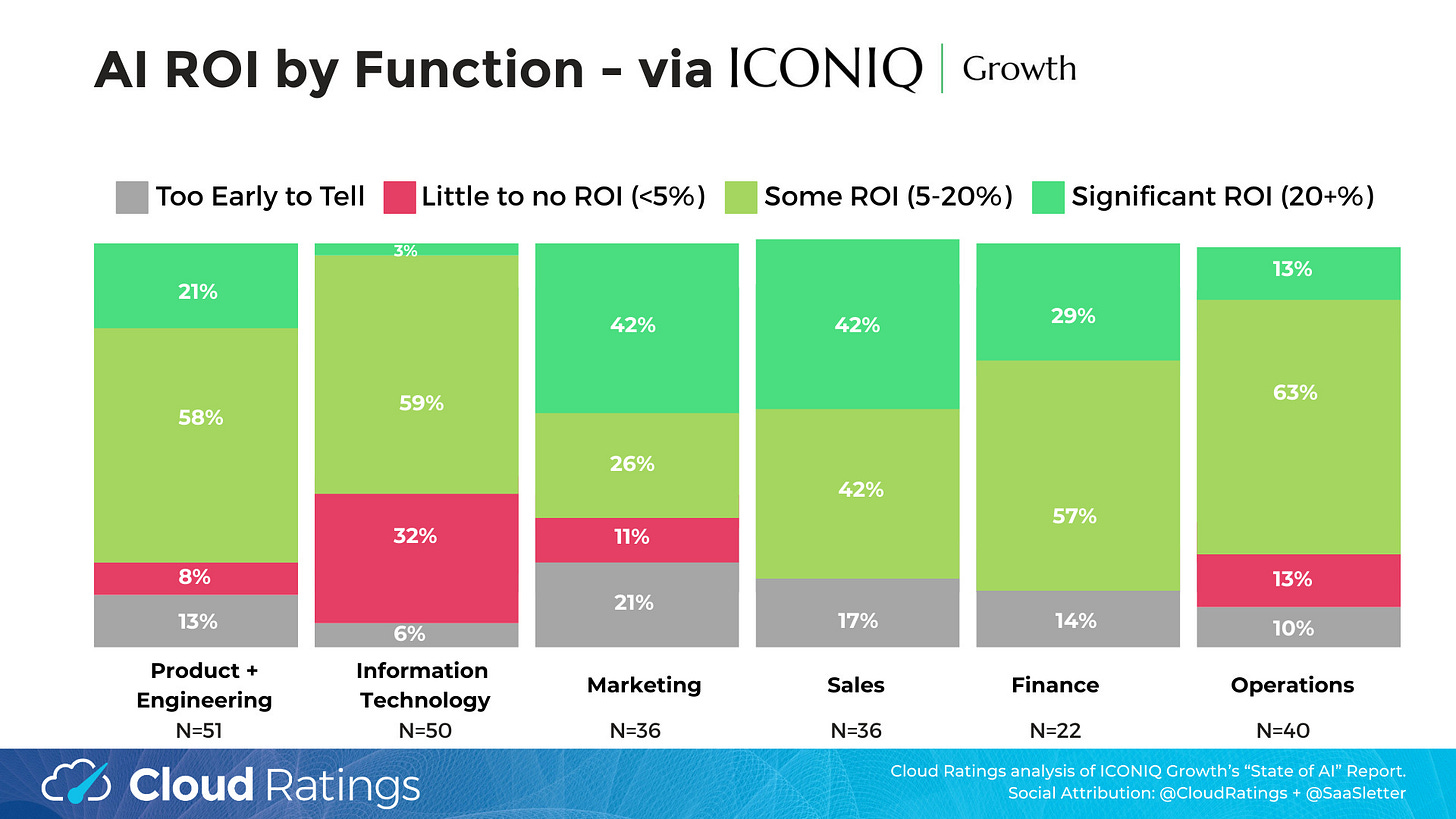

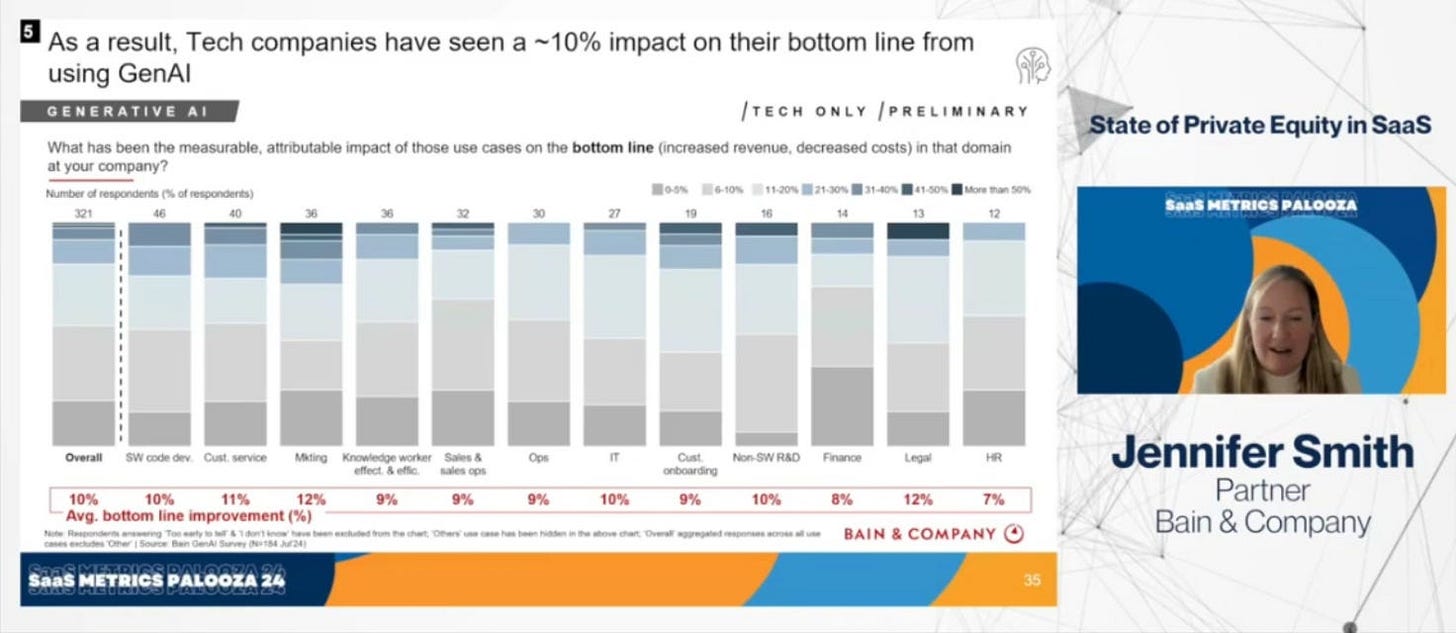

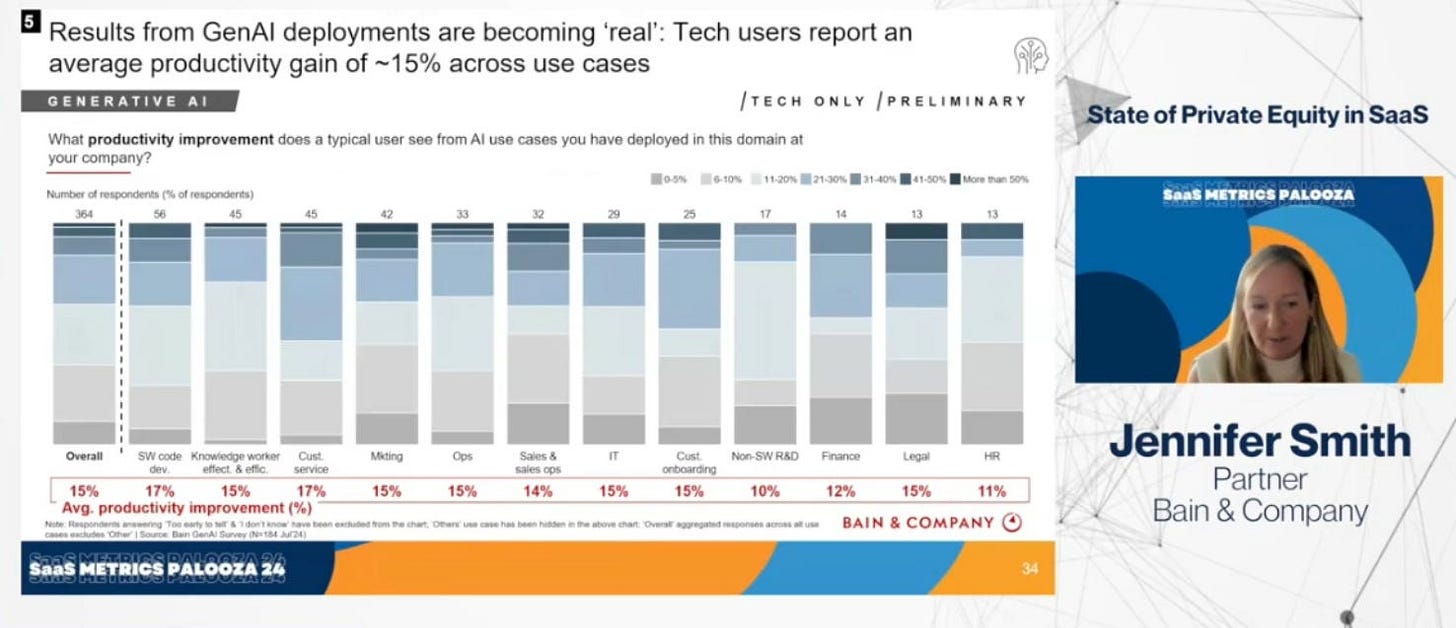

The early returns on AI ROI *suggest* that *current* models are *sufficient* to justify the levels of AI enthusiasm in a *B2B* context.

See excerpts from AI ROI studies by ICONIQ Growth, Morgan Stanley, ETR (Enterprise Technology Research), and Bain, with links and context in our earlier coverage here:

Once initiative-level AI returns are translated into P&L impact (see the margin improvement table here), AI ROI should clear any corporate investment hurdle rate.
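The sketch below shows the hurdle-rate comparison and a simple margin translation. It assumes one-period ROI = (benefit - cost) / cost and margin impact = net benefit / revenue. The 15% hurdle rate, the initiative, and all dollar figures are hypothetical placeholders. They are not numbers from the ICONIQ Growth, Morgan Stanley, ETR, or Bain studies, and they are not the methodology behind our margin improvement table.

```python
from dataclasses import dataclass


@dataclass
class AIInitiative:
    """Hypothetical AI initiative with annual benefit and cost in USD."""
    name: str
    annual_benefit: float  # e.g., labor savings or incremental gross profit
    annual_cost: float     # e.g., licenses, inference, implementation

    def roi(self) -> float:
        """Simple one-period ROI: (benefit - cost) / cost."""
        return (self.annual_benefit - self.annual_cost) / self.annual_cost


def clears_hurdle(initiative: AIInitiative, hurdle_rate: float) -> bool:
    """True if the initiative's ROI meets or exceeds the hurdle rate."""
    return initiative.roi() >= hurdle_rate


def margin_improvement_bps(initiative: AIInitiative, annual_revenue: float) -> float:
    """Net benefit as basis points of revenue (a simplified P&L translation)."""
    net_benefit = initiative.annual_benefit - initiative.annual_cost
    return net_benefit * 10_000 / annual_revenue


HURDLE_RATE = 0.15  # assumed hurdle rate, not from the source

support_bot = AIInitiative("support deflection", annual_benefit=500_000, annual_cost=200_000)
print(f"{support_bot.name}: ROI {support_bot.roi():.0%}, "
      f"clears hurdle: {clears_hurdle(support_bot, HURDLE_RATE)}, "
      f"margin impact on $50M revenue: {margin_improvement_bps(support_bot, 50_000_000):.0f} bps")
```

A multi-year analysis would discount cash flows rather than use one-period ROI. The simple form is kept here only to show the comparison against a hurdle rate.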

Slowing AI Model Progress → *B2B* AI Read Through - Data + Costs

Beyond ROI, I would note that some of the factors cited in The Information article (like the data wall) do not necessarily map to B2B use cases. Corporations hold their own stores of data, which are neither available to nor tied to the progress curve of ChatGPT-style models.

And this internal corporate data can be “golden.” Abraham Thomas offers great context on data quality:

“Quantity has a quality that’s all its own, but when it comes to training data, the converse is also true. ‘Data quality scales better than data size’: above a certain corpus size, the ROI from improving quality almost always outweighs that from increasing coverage. This suggests that golden data — data of exceptional quality for a given use case — is, well, golden.” - via “SaaSletter - AI In Finance And The Enterprise”

Relatedly, small language models are performing well in B2B contexts. More from Tomasz Tunguz and a Databricks report here: “Small But Mighty AI”

Declining model costs are a very powerful denominator driver in the AI ROI equation.

See this week’s “Welcome to LLMflation – LLM inference cost is going down fast” from Guido Appenzeller / Andreessen Horowitz, with this key takeaway:

In fact, the price decline in LLMs is even faster than that of compute cost during the PC revolution or bandwidth during the dotcom boom: For an LLM of equivalent performance, the cost is decreasing by 10x every year.
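The sketch below illustrates the "denominator driver" point. It holds an initiative's annual benefit fixed and lets only the inference share of cost fall 10x per year, per the LLMflation takeaway. The benefit, the year-0 inference spend, and the flat non-inference cost are all hypothetical assumptions. Real deployments also carry integration, staffing, and licensing costs that do not deflate at this rate.

```python
def cost_after_years(initial_cost: float, years: int, annual_decline_factor: float = 10.0) -> float:
    """Inference cost after `years`, falling by `annual_decline_factor` each year."""
    return initial_cost / annual_decline_factor ** years


def roi(benefit: float, cost: float) -> float:
    """Simple one-period ROI: (benefit - cost) / cost."""
    return (benefit - cost) / cost


BENEFIT = 300_000            # hypothetical annual benefit, held constant
INFERENCE_COST_Y0 = 150_000  # hypothetical year-0 inference spend
OTHER_COST = 50_000          # hypothetical non-inference cost, assumed flat

for year in range(4):
    total_cost = cost_after_years(INFERENCE_COST_Y0, year) + OTHER_COST
    print(f"year {year}: total cost ${total_cost:,.0f}, ROI {roi(BENEFIT, total_cost):.0%}")
```

Most of the ROI gain arrives in the first year. After that, the non-deflating costs dominate the denominator, so further inference price cuts matter less.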

About Cloud Ratings

For our many new readers, we recently announced a research partnership with G2; more here:

This slide shows how our G2-enhanced Quadrants (like our recent Sales Compensation Software release), this business-of-software newsletter you are reading, our podcasts, and our True ROI practice area all fit within our modern analyst firm: